Captured

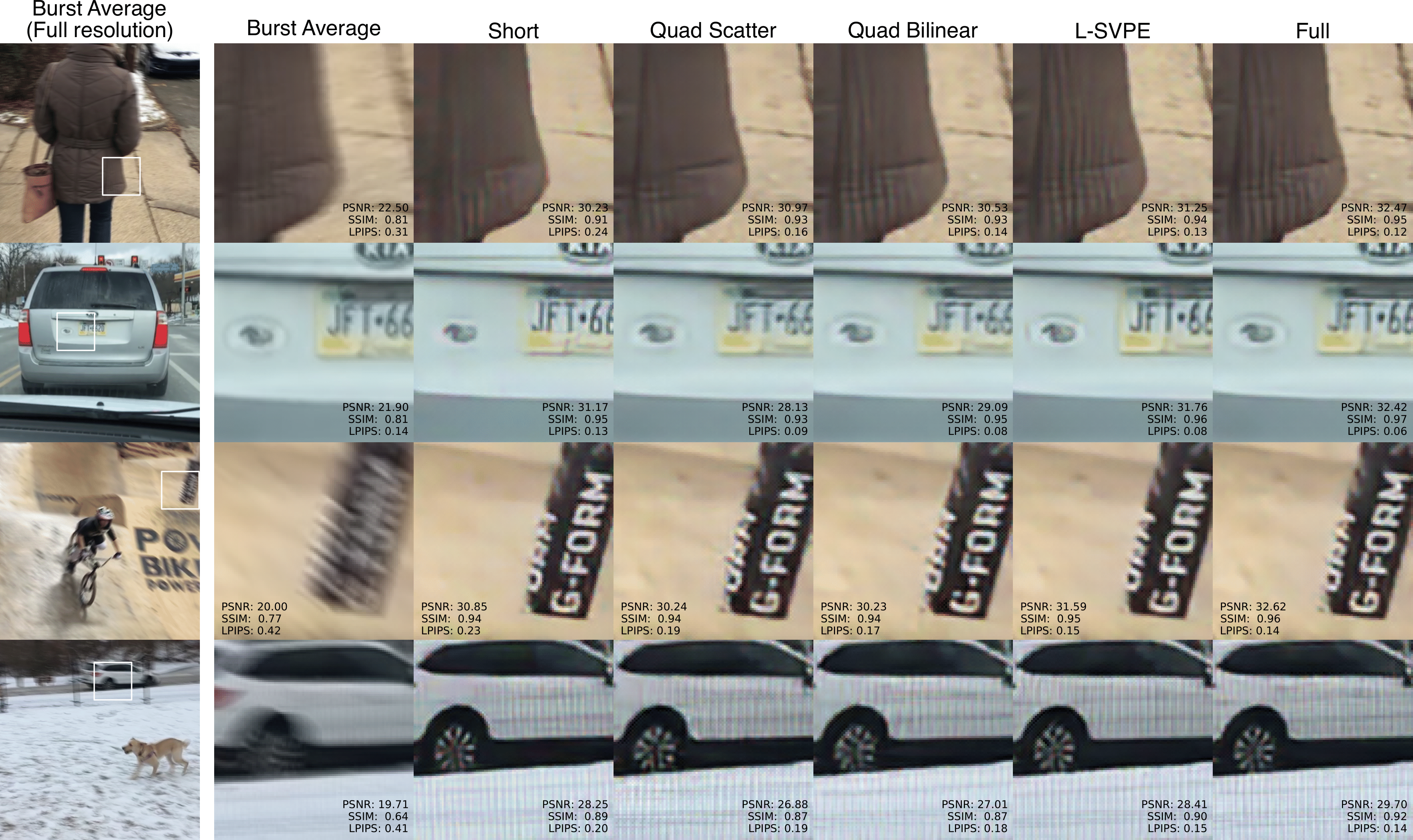

Captured results show how blurred or noisy global exposures can be: the Long exposure is blurred by motion, while the Short exposure is noisy.

Computationally removing the motion blur introduced by camera shake or object motion in a captured image remains a challenging task in computational photography.

Deblurring methods are often limited by the fixed global exposure time of the image capture process. The post-processing algorithm must either deblur a longer exposure that contains relatively little noise, or denoise a short exposure that intentionally removes the opportunity for blur at the cost of increased noise.
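The blur-versus-noise trade-off of a single global exposure can be illustrated with a toy 1-D simulation. This is a minimal sketch, not the capture model used in this work: the helper names (`moving_edge`, `capture`, `rms_error`) are hypothetical, the scene is a step edge moving one pixel per sub-frame, and noise is modeled as additive Gaussian read noise only, which is a simplifying assumption.

```python
import random

def moving_edge(width, n_frames, start):
    """Sub-frames of a step edge that moves one pixel per sub-frame."""
    return [[1.0 if x >= start + t else 0.0 for x in range(width)]
            for t in range(n_frames)]

def capture(frames, n_sub, read_noise_std, rng):
    """Integrate the first n_sub sub-frames with one global exposure.

    Assumption: noise is a single Gaussian read-noise sample per pixel,
    added after integration; the result is normalized by exposure length.
    """
    width = len(frames[0])
    out = []
    for x in range(width):
        integrated = sum(frames[t][x] for t in range(n_sub))
        noisy = integrated + rng.gauss(0.0, read_noise_std)
        out.append(noisy / n_sub)
    return out

def rms_error(a, b):
    """Root-mean-square difference between two equally long signals."""
    return (sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)) ** 0.5

rng = random.Random(0)
frames = moving_edge(width=16, n_frames=8, start=4)
short = capture(frames, 1, 0.05, rng)   # sharp edge, higher relative noise
long_ = capture(frames, 8, 0.05, rng)   # smeared edge, lower relative noise
```

Under this toy model the short exposure keeps the edge sharp but retains the full read noise after normalization, while the long exposure divides the noise by the exposure length and smears the edge across the pixels it traversed.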

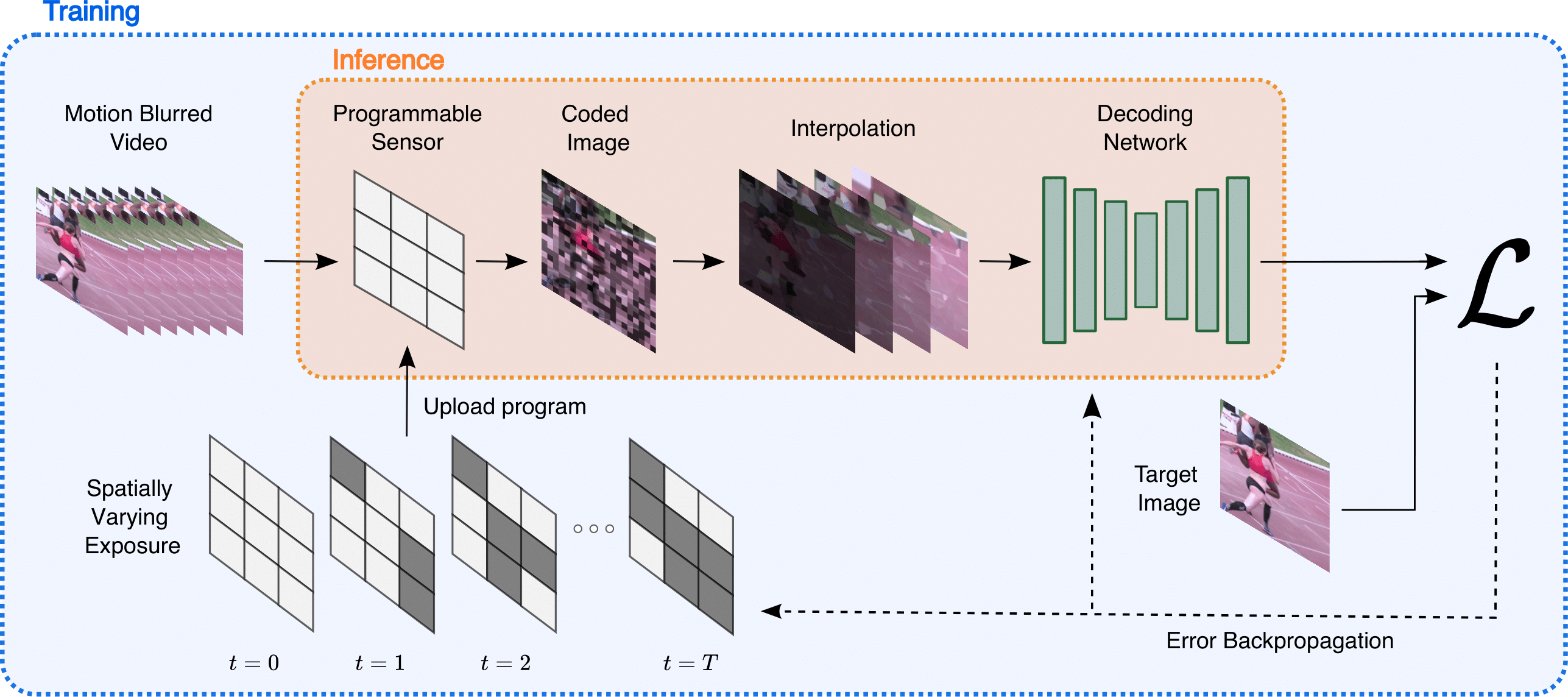

We present a novel approach that leverages spatially varying pixel exposures for motion deblurring using next-generation focal-plane sensor-processors, together with an end-to-end design of these exposures and a machine-learning-based motion-deblurring framework. We demonstrate in simulation and with a physical prototype that learned spatially varying pixel exposures (L-SVPE) can successfully deblur scenes while recovering high-frequency detail.
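To make the idea of spatially varying pixel exposures concrete, the sketch below simulates a capture in which every pixel integrates for a length set by a repeating 2x2 tile. It is an illustration under stated assumptions: the function name `svpe_capture` and the tile values are hypothetical, the 2x2 tiling is assumed from the quad sensor design mentioned on this page, and the tile is fixed by hand here, whereas in L-SVPE the exposures are learned end to end.

```python
def svpe_capture(frames, tile):
    """Simulate a capture with per-pixel exposure lengths.

    frames: list of T sub-frames, each an H x W list of lists of floats.
    tile:   2x2 list of exposure lengths in sub-frames (each between 1 and T),
            repeated across the sensor. Values here are hypothetical.
    Each pixel averages the first n sub-frames, where n comes from the tile.
    """
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            n = tile[y % 2][x % 2]
            out[y][x] = sum(frames[t][y][x] for t in range(n)) / n
    return out

# Four 4x4 sub-frames whose brightness equals the sub-frame index.
frames = [[[float(t)] * 4 for _ in range(4)] for t in range(4)]
coded = svpe_capture(frames, tile=[[1, 2], [3, 4]])
```

The single coded image interleaves short and long exposures, so a reconstruction stage has both low-blur and low-noise measurements of neighboring pixels to work with.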

We compare our method, L-SVPE, against other top-performing baselines, including those that use different interpolation methods. Burst Average is the average of all frames, and Short uses a short global exposure. Quad Scatter and Quad Bilinear use the same quad sensor design with scatter or bilinear interpolation, respectively. Full uses a full stack of a short, medium, and long exposure.
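Two of these baselines are simple enough to sketch. The code below is one plausible reading, not the implementation used in the comparison: `burst_average` averages all frames as described, and `bilinear_fill` (a hypothetical helper) bilinearly interpolates the pixels of one exposure in a 2x2 quad pattern up to full resolution, replicating the nearest sample at the image border. How Quad Bilinear handles borders and combines the four exposures is not stated here, so those details are assumptions.

```python
import math

def burst_average(frames):
    """Average a list of H x W frames pixel by pixel."""
    t = len(frames)
    h, w = len(frames[0]), len(frames[0][0])
    return [[sum(f[y][x] for f in frames) / t for x in range(w)]
            for y in range(h)]

def bilinear_fill(img, dy, dx):
    """Interpolate the samples at (y % 2 == dy, x % 2 == dx) to every pixel.

    Assumption: samples outside the image are replaced by the nearest
    available sample (border replication).
    """
    h, w = len(img), len(img[0])
    ny, nx = len(range(dy, h, 2)), len(range(dx, w, 2))

    def sample(i, j):
        # Clamp sample-grid indices, then map back to image coordinates.
        i = min(max(i, 0), ny - 1)
        j = min(max(j, 0), nx - 1)
        return img[dy + 2 * i][dx + 2 * j]

    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        gy = (y - dy) / 2          # position on the sample grid
        i0 = math.floor(gy)
        wy = gy - i0
        for x in range(w):
            gx = (x - dx) / 2
            j0 = math.floor(gx)
            wx = gx - j0
            top = (1 - wx) * sample(i0, j0) + wx * sample(i0, j0 + 1)
            bot = (1 - wx) * sample(i0 + 1, j0) + wx * sample(i0 + 1, j0 + 1)
            out[y][x] = (1 - wy) * top + wy * bot
    return out
```

Calling `bilinear_fill` once per offset `(dy, dx)` in the 2x2 tile yields four full-resolution images, one per exposure, which could then be passed to a reconstruction stage.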

If this work interested you, feel free to check out the following related works!

Neural Sensors uses learned coded exposures for HDR and video compressive imaging.

MantissaCam uses irradiance encoding to perform single snapshot HDR imaging.

Time Multiplexed Coded Apertures demonstrates the use of sub-exposures for compressive light field imaging and hyperspectral imaging.

More examples of end-to-end designed computational imaging include Depth from Defocus with Learned Optics and Deep Optics for Single-Shot HDR Imaging.

@article{nguyen2022learning,
  author = {Nguyen, Cindy M and Martel, Julien NP and Wetzstein, Gordon},
  title = {Learning Spatially Varying Pixel Exposures for Motion Deblurring},
  journal = {arXiv preprint arXiv:2204.07267},
  year = {2022},
}